Artifacts Analysis and Mitigation in NLP

Multi-Scales Data Augmentation Approach with ELECTRA Pre-Trained Model.

Abstract

Machine learning models can reach high performance on benchmark natural language processing (NLP) datasets but fail in more challenging settings. We study this issue when pre-trained model learns dataset artifacts in natural language inference (NLI), the topic of studying the logical relationship between a pair of text sequences. We provide a variety of techniques for analyzing and locating dataset artifacts inside the crowdsourced Stanford Natural Language Inference (SNLI) corpus. We study the stylistic pattern of dataset artifacts in the SNLI. To mitigate dataset artifacts, we employ an unique multi-scale data augmentation technique with two distinct frameworks: a behavioral testing checklist at the sentence level and lexical synonym criteria at the word level. Specifically, our combination method enhances our model’s resistance to perturbation testing, enabling it to continuously outperform the pre-trained baseline.

Introduction

Background

Natural Language Inference (NLI) is a fundamental subset of Natural Language Processing (NLP) that investigates whether a natural language sequence premise $p$ can infer (entailment), not imply (contradiction), or remain undetermined (neutral) with respect to a natural language sequence hypothesis $h$.

Large-scale NLI Datasets

To well-understand the semantic representation in NLI, and address the lack of large-scale materials, Stanford NLP groups have introduced the Stanford Natural Language Inference corpus

| Entailment | Premise Hypothesis |

A soccer game with multiple males playing. Some men are playing a sport. |

| Neutral | Premise Hypothesis |

An older and younger man smiling. Two men are smiling and laughing at the cats playing on the floor. |

| Contradiction | Premise Hypothesis |

A man inspects the uniform of a figure in some East Asian country. The man is sleeping. |

SNLI includes 570 k (550 k training pairs, 10 k development pairs, and 10 k test pairs) human-labeled sentence pairs categorized as entailment, contradiction, or neutral for training NLP models in NLI subjects. For the premise of the SNLI corpus, the researchers used captions from the Flickr30k corpus

Entailment $h$ is definitely true given $p$

Neutral $h$ might be true given $p$

Contradiction $h$ is definitely not true given $p$

Understand Dataset Artifacts

Large-scale NLI datasets gathered through crowdsourcing are useful for training and evaluating NLU algorithms. Often, machine learning NLP models can achieve impressive results on these benchmark datasets. However, a new study has shown that NLP models can achieve incredibly high performance even without training on the premise corpus but on hypothesis baseline alone

| Premise | A woman wearing all white and eating, walks next to a man holding a briefcase. |

|

Entailment Neutral Contradiction |

A person eating. Two coworkers cross pathes on a street. A woman eats ice cream walking down the sidewalk, and there is another woman. |

For instance, a closer examination of the SNLI corpus (Table 2) found that crowdsourcing frequently employs a few unexpected annotation strategies. Table 2 displays a single set of three pairings from SNLI: it is quite often to (1) change or omit gender information when generating entailment hypothesis, (2) neutralize the gender information and simply count the number of persons in the premise for neutral pairs, and (3) it is also typical to adjust gender information or only add negation content to create contradiction hypothesis. Consequently, NLP models trained on such datasets with artifacts tend to overestimate performance.

Related Work

Detection on Dataset Artifacts

Several strategies are developed to discover and analyze the dataset to understand its properties and identify any possible issues or dataset artifacts. Statistics such as the mean, standard deviation, minimum, and maximum values for each variable are computed to determine the shape of the data distribution and identify abnormalities. For instance, dataset artifacts may need to be addressed if the mean and standard deviation of a given corpus is significantly different from what statistical theory would predict

One research develops a collection of contrast examples by perturbing the input in various ways and testing the model’s classification of these examples. This highlights the region in which the model may be making incorrect decisions, allowing for the possible correction of dataset artifacts that contribute to these inaccuracies

Another way is the checklist test, which involves creating a series of tests that cover a wide variety of common behaviors that NLP models should be able to handle, and then evaluating the model on these trials to see whether it has any artifacts

To evaluate model performance and identify and fix the observed dataset artifacts, Poliak et al.

Other studies set up adversarial examples that challenge the NLI model’s reliance on syntactic heuristics

Address Dataset Artifacts

There is more than one approach for dealing with the artifacts in the dataset. When the available training data for a natural language processing (NLP) model is insufficient, it can be supplemented with “adversarial datasets,” which are similar to “adversarial challenge sets” in that they are generated by perturbing and transforming the original corpus on various levels to generate additional sets for NLP model learning

Our Implementation Details

Pre-Trained Model

We implement the Efficiently Learning an Encoder that Classifies Token Replacements Accurately (ELECTRA, small) as our pre-trained model in this paper. ELECTRA is designed to improve the performance of BERT

The ELECTRA-small model is a variant of the original ELECTRA model that is more compact and needs fewer computing resources during training and deployment. Despite its small size, the ELECTRA-small model is capable of achieving the same performance as the standard ELECTRA model for certain jobs. Therefore, it is preferable for use cases with limited computer resources.

Dataset Artifacts Analysis

Hypothesis-only SNLI

A hypothesis-only baseline, which only employs the hypothesis phrases of a dataset without the matching premise sentences, is one technique to investigate dataset artifacts in natural language inference. By analyzing the performance of a model trained on a hypothesis-only dataset, it is possible to identify potential artifacts or biases in the dataset that affects the model’s performance.

Poliak et al.

In our research, we propose to investigate dataset artifacts in natural language inference using a hypothesis-only SNLI dataset baseline. We will exclude all premise sentences from the dataset and only utilize hypothesis sentences for training and evaluation. To do this, the datasets.load dataset function from the datasets package will be used to load the SNLI dataset. Then, we will split the dataset into train and test sets and eliminate the premise sentences from the training and testing sets, leaving just the hypothesis sentences. Subsequently, the hypothesis-only training set will be employed to train the ELECTRA-small pre-trained model. We use the AutoModelForSequenceClassification class to fine-tune the ELECTRA-small model.

Once the model is trained, we will evaluate its performance on the hypothesis-only testing set. We will also compare the performance of the hypothesis-only model to a model trained on the full SNLI dataset, with both premise and hypothesis sentences, to see how the removal of the premise sentences affects the model’s performance. This approach aims to explore the potential artifacts and biases in the SNLI dataset by using a hypothesis-only baseline and to evaluate the performance of the ELECTRA-small model on this modified dataset.

Behavioral Test

To evaluate the efficacy of a model, we employ a suite of pre-defined tests as analytical metrics, and we’ve adopted the CheckList set from

The INV test requires the model’s prediction to be steady despite the introduction of input perturbations, e.g. name entity recognition (NER) testing. For example, the NER capability for Sentiment modifies place names using specific perturbation functions. The results of this test are crucial for measuring the robustness of a model.

The DIR test is comparable to the INV test, however the label is anticipated to differ in a particular way. For instance, it is not expected that the sentiment of a sentence will become more positive if “I dread you.” is added at the end of a comment “@JetBlue why won’t YOU help them?! Ugh.”

The CheckList set approach provides a standardized mechanism for assessing the capabilities and behavior of NLP models. These analytic metrics give particular checks for many areas of a NLP model’s performance, enabling a more thorough evaluation.

Adversarial Challenge

On our approach, we offer adversarial challenge as a second way for analyzing artifacts. In particular, we employ the Textattack framework to produce adversarial instances to attack our trained models using SNLI datasets in order to discover biases and spurious correlations

Dataset Artifacts Mitigation

We propose a data augmentation strategy to mitigate the model’s dataet artifacts based on the findings from the previous section. On our SNLI dataset, we employ two different scales of data augmentation approaches. The first is based on the CheckListing set

Another method we implemented is the word-level augmentation, WordNet list

To prevent overlapping augmentation from the checkList set, we simply employ the synonym rule from the wordNet in our implementation. We scan each hypothesis phrase and produce two additional sentences by replacing certain synonym terms according to wordNet rules, while the premise corpus remains unchanged. This perturbation preserves the original dataset’s label, as we only substitute synonym occurrences from each hypothesis sentence, which should not affect the original dataset’s sentiment.

Experiments

Stylistic Pattern from Crowdsourcing Generated Dataset

Surprisingly, our model ablation research demonstrates that the hypothesis alone model achieves 69.76\% accuracy on the SNLI dataset (Table 3). However, the results of a hypothesis-only model should not be so impressive. As a result of the stylistic pattern established by crowdsourcing, the dataset contains a high number of words that resemble the premise, indicating that the information in the hypothesis is redundant.

| Experiment | Accuracy | Dataset |

|---|---|---|

|

Hypothesis-Only Hypothesis-Premise |

69.76 89.20 |

SNLI |

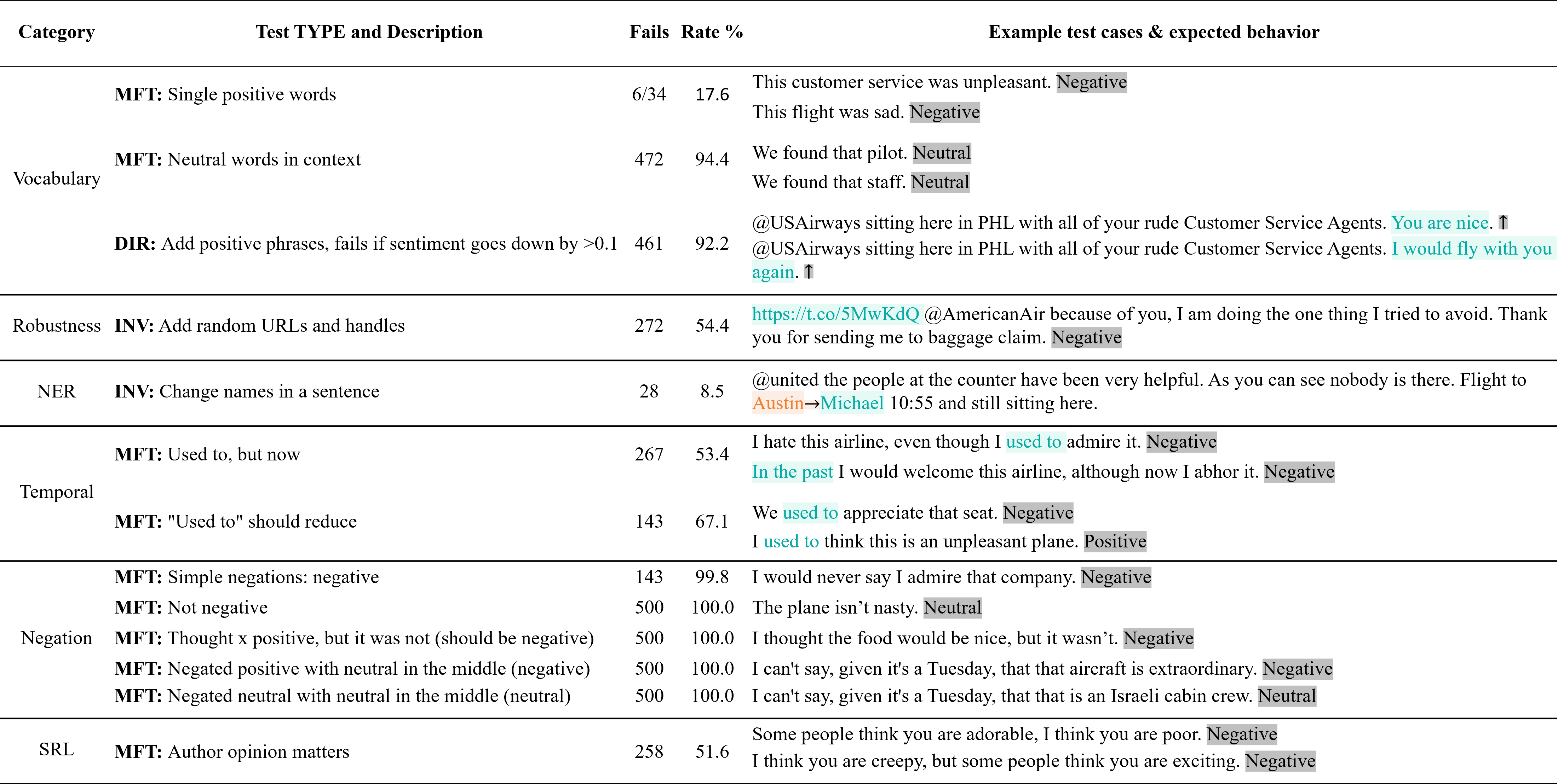

Figure 2 shows that the pre-trained, finely-tuned ELECTRA-small model exhibits less-than-ideal performance across a variety of predefined tests, as determined by the checklisting tests. The examination of dataset artifacts indicates that the sentiment analysis model performs well in certain tests, including the vocabulary test with single positive words, where the failure rate was only 17.6%, and the NER test of changing names in sentences, where the failure rate was only 8.5%. However, when submitted to a robustness test that included random URLs and handles, the model failed 54.4% of the time. This suggests that the model can detect sentences with a positive sentiment well, but poorly with a negative ones.

The model fail with a 99.8% rate on tests involving basic negations and a 94.4% rate on tests using neutral terms in context. The model also fail 100% of tests that required more sophisticated negations, such as the test in which positive words were negated and neutral terms were sandwiched in the center.

The model failed with a failure rate of 53.4% when evaluated with temporal phrases such as “used to” and “but now.”. This raises concerns that the model has trouble understand context and adapting to shifting sentiments.

Performance on SNLI Dataset

Following the addition of more data, the model’s accuracy increases to 89.79 percent, a 0.59 percent improvement over the fine-tuned baseline model (Table 4). However, the multi-scale data augmentation does help with artifacts by reducing the failure rate for vocab+POS and negation tests, both of which use predominantly positive and neutral pairings (Figure 3). “MFT: Thought x positive, but it was not (should be negative)” and “MFT: Not negative” have seen the greatest falure rate reductions (-94.2 and -85.7 percentage points, respectively).

| Dataset | Accuracy | Data Augmentation |

|---|---|---|

| SNLI | 89.20 89.79 |

No CheckList and WordNet |

However, when the data augmentation does not cover all the dataset artifacts in the SNLI dataset, most of the negative challenges remain and the failure rate even increase, such as “INV: Change names in a sentence” which reach up to +11.1% more compared to the model before data augmentation.

Conclusions and Future Work

To address this issue, we introduce a hybrid approach to identifying and mitigating SNLI dataset artifacts by combining a fine-tuned, pre-trained ELECTRA-small model. On the augmented SNLI dataset, its performance is superior to that of the baseline models. Yet there is a great deal of room for development. The first issue is dealing with the SNLI dataset, which has a heavily created pattern due to the crowdsourcing attempt, and finding the artifact with the biggest influence on the dataset. The second fureture work can addresse how to optimize the architecture beyond the ELECTRA model. Contrastive learning among original and enhanced instances can be the foundation for enhancing performance based on a contrastive estimated loss, which can reduce the loss among instance bundles within the same premise group.

arxiv

@misc{lu2022multiscales,

title={Multi-Scales Data Augmentation Approach In Natural Language Inference For Artifacts Mitigation And Pre-Trained Model Optimization},

author={Zhenyuan Lu},

year={2022},

eprint={2212.08756},

archivePrefix={arXiv},

primaryClass={cs.CL}

}